GDPR video compliance in 2026 requires irreversible anonymization of biometric identifiers in visual media to meet the standards of the General Data Protection Regulation (GDPR).

Modern compliance requirements have shifted from simple masking to "Forensic-Level Privacy," necessitating the use of advanced redaction software that prevents forensic de-pixelation. Failure to meet these requirements can lead to GDPR enforcement action and substantial administrative fines.

What is GDPR and Why has Video Compliance Changed?

To understand the current landscape, start with the basics: what does GDPR stand for? The General Data Protection Regulation (GDPR) is the EU's core legal framework for protecting the personal data and privacy of individuals in the EU. Since it took effect, enforcement of the EU GDPR has evolved; as of 2026, enforcement news highlights a surge in penalties for "incomplete anonymization" in CCTV and social media marketing footage.

Data protection authorities now distinguish between "pseudonymization" (reversible) and "anonymization" (irreversible). For high-stakes data security, simply placing a static box over a face, one that does not follow the subject as they move, is no longer sufficient under GDPR principles.

Key Takeaways: The 2026 Compliance Landscape

- Biometric Focus: Facial geometry used to identify a person is treated as special category data (GDPR Article 9).

- Irreversibility: If a blur can be reversed by AI, the footage is still personal data, and publishing it may amount to a data breach.

- Edge Processing: Data processed locally is viewed more favorably by auditors.

The 2026 GDPR Video Privacy Checklist

Before publishing or storing any video containing human subjects in the EU, ensure your workflow checks off these six critical requirements:

- Lawful Basis for Processing: Have you identified your legal ground (e.g., Legitimate Interest or Consent) for capturing the footage? (Reference: GDPR Article 6).

- Data Minimization: Are you only capturing the footage necessary for your stated purpose? Avoid "overshooting" areas where privacy is expected.

- Automated Anonymization: Have you applied privacy masking to all non-consenting subjects? For high-volume footage, manual blurring is no longer considered a "sufficient technical measure."

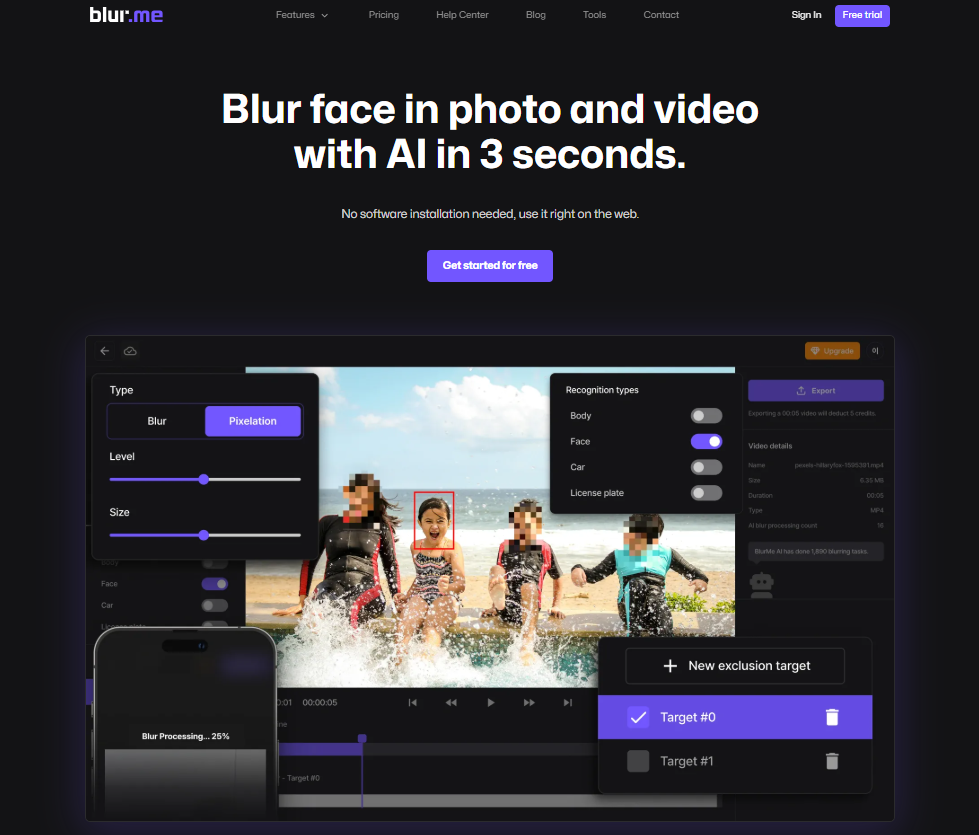

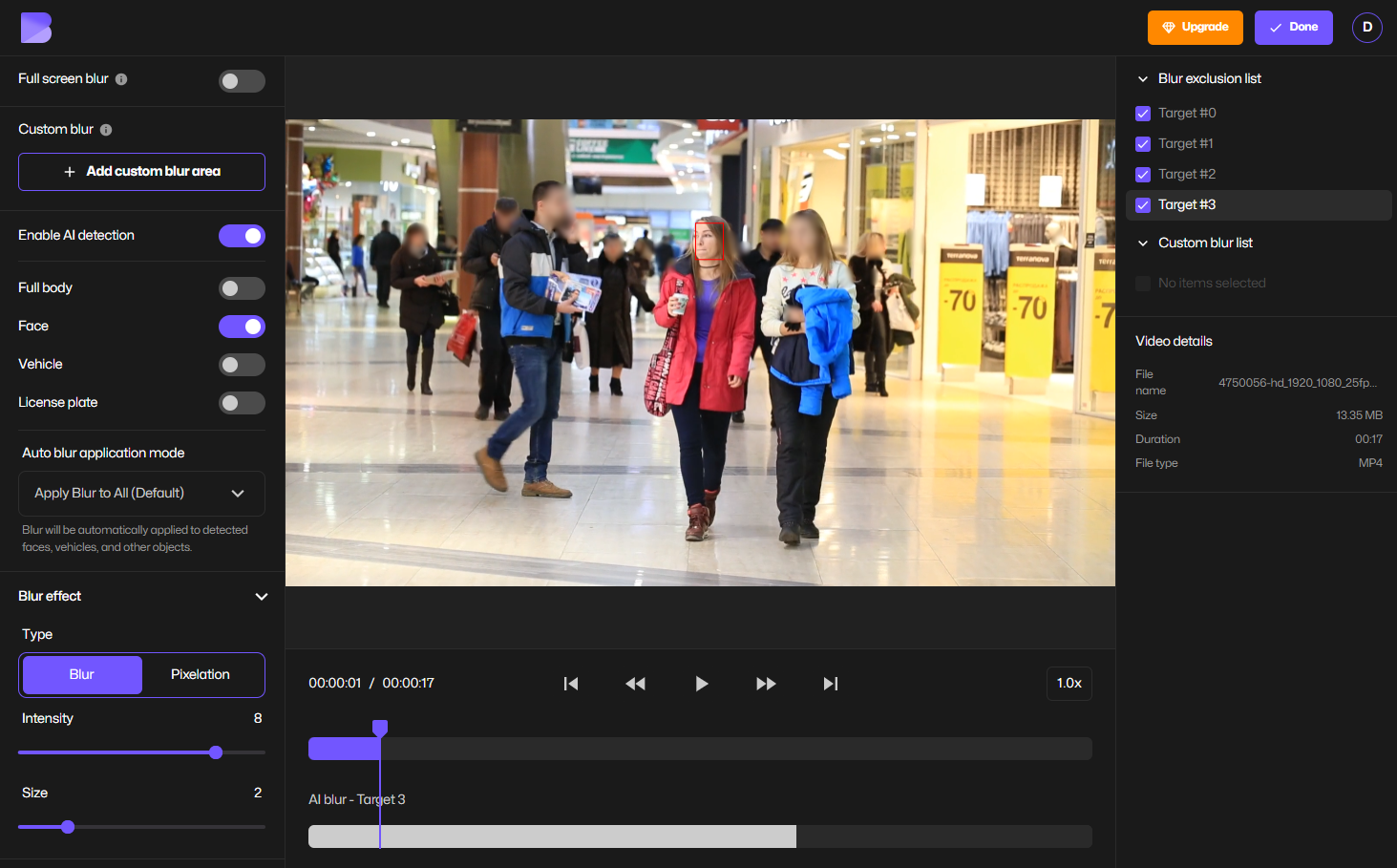

- Solution: Use BlurMe’s Automatic Face Blur to ensure no biometric data is missed.

- Forensic-Grade Redaction: Does your redaction method prevent forensic de-pixelation? Simple pixelation may not meet the 2026 "Irreversibility" standard.

- Metadata Sanitization: Have you stripped the video file of embedded metadata, such as EXIF-style tags, GPS coordinates, and device identifiers?

- Data Subject Access Request (DSAR) Readiness: If an individual asks for their data to be deleted or anonymized, do you have a workflow to process that video quickly?
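
The metadata sanitization item in the checklist above can be sketched as a small wrapper around the ffmpeg command line. This is a minimal sketch, assuming ffmpeg is installed and on the PATH; the function names and file paths are hypothetical. The `-map_metadata -1` option tells ffmpeg not to carry global metadata into the output, and `-c copy` copies the streams without re-encoding.

```python
import subprocess
from pathlib import Path


def build_strip_command(src: Path, dst: Path) -> list[str]:
    """Build an ffmpeg command that copies streams but drops global metadata."""
    return [
        "ffmpeg",
        "-i", str(src),
        "-map_metadata", "-1",  # do not copy global metadata from the input
        "-c", "copy",           # copy audio/video streams without re-encoding
        str(dst),
    ]


def strip_metadata(src: Path, dst: Path) -> None:
    """Run the command; raises CalledProcessError if ffmpeg exits with an error."""
    subprocess.run(build_strip_command(src, dst), check=True)
```

This does not guarantee that every stream-level or container-specific tag is gone, so it is worth inspecting the output file (for example with ffprobe) before publishing.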

Technical Nuance: The Danger of Forensic De-pixelation

A significant factor for 2026 is the rise of forensic de-pixelation. Traditional pixelation—averaging the color values of a block of pixels—is now vulnerable to "Inverse Diffusion" AI models. These models can "hallucinate" the original face with startling accuracy.
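
To make the weakness concrete, here is a minimal sketch of block-average pixelation on a tiny grayscale grid, written in plain Python. The `pixelate` function and the sample values are illustrative assumptions, not any vendor's implementation.

```python
def pixelate(gray, block):
    """Replace each block x block tile with the integer mean of its pixels."""
    h, w = len(gray), len(gray[0])
    out = [row[:] for row in gray]
    for top in range(0, h, block):
        for left in range(0, w, block):
            tile = [(y, x)
                    for y in range(top, min(top + block, h))
                    for x in range(left, min(left + block, w))]
            mean = sum(gray[y][x] for y, x in tile) // len(tile)
            for y, x in tile:
                out[y][x] = mean
    return out


face_a = [[10, 20], [30, 40]]
face_b = [[200, 210], [220, 230]]
print(pixelate(face_a, 2))  # [[25, 25], [25, 25]]
print(pixelate(face_b, 2))  # [[215, 215], [215, 215]]
```

The two outputs differ because each one is still a function of the original pixels. That residual signal is what a reconstruction model has to work with.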

As Wojciech Wiewiórowski, the European Data Protection Supervisor (EDPS), has emphasized, anonymization is increasingly difficult to achieve as technology advances, but it remains the gold standard for data protection.

This highlights why the transition from basic pixelation to high-entropy privacy masking is no longer optional. Under the General Data Protection Regulation (GDPR), if the "means reasonably likely to be used" (Recital 26) can reverse the blur, the data is not truly anonymized.

To ensure data protection, professional redaction software must use high-entropy Gaussian kernels or solid-fill masking. This ensures that the underlying biometric data is not just hidden, but mathematically destroyed. As the UK Information Commissioner's Office (ICO) notes in its guidance on anonymization, true anonymization must ensure the data subject is no longer identifiable by any means "reasonably likely to be used."
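
Solid-fill masking is the easier of the two approaches to reason about. The sketch below uses plain Python and a hypothetical `solid_fill` helper with a `(top, left, bottom, right)` box convention. It shows that two different originals produce identical output once the region is overwritten with a constant.

```python
def solid_fill(gray, box, value=0):
    """Overwrite every pixel inside box = (top, left, bottom, right) with a constant."""
    top, left, bottom, right = box
    out = [row[:] for row in gray]
    for y in range(top, bottom):
        for x in range(left, right):
            out[y][x] = value
    return out


frame_a = [[10, 20, 5], [30, 40, 5]]
frame_b = [[200, 210, 5], [220, 230, 5]]
box = (0, 0, 2, 2)  # hypothetical face region covering the first two columns

masked_a = solid_fill(frame_a, box)
masked_b = solid_fill(frame_b, box)
print(masked_a == masked_b)  # True
```

Because the masked region no longer depends on the input, nothing inside it can be reconstructed. The position and size of the mask still reveal where a person was, so context outside the box needs separate review.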

Security-Grade Anonymization

While manual anonymization by redaction is prone to human error—as seen in many high-profile legal filings—using a tool like BlurMe's AI Redaction Software ensures that biometric data is mathematically non-recoverable, meeting the strictest compliance requirements.

How to Comply with GDPR for Surveillance Videos in 2026

To maintain GDPR video compliance, your surveillance strategy must pivot toward Privacy by Design (Article 25). This requires integrating anonymization directly into your data workflow to prevent biometric data from becoming a liability.

Strategic Solution: Automated Redaction with BlurMe AI

Manually editing hours of CCTV footage is not only inefficient but also creates "Privacy Gaps": single frames where a face might be exposed, leading to a GDPR violation.

BlurMe is engineered to meet the compliance requirements of 2026 by providing:

- Persistent AI Tracking: Our engine maintains a privacy masking lock on moving subjects across 4K surveillance feeds, ensuring no frame-skipping occurs.

- Forensic-Grade Obfuscation: Move beyond vulnerable pixelation. BlurMe uses high-entropy Gaussian kernels that are immune to forensic de-pixelation attempts.

- Bulk Redaction for DSARs: When hit with a mass access request, our Blur CCTV enterprise solution allows you to process multiple camera feeds simultaneously, significantly reducing the administrative burden of GDPR fulfillment.

Comparison of Anonymization Methods for GDPR

Implementing the GDPR Compliance Framework in Video

When considering what GDPR aims to protect, the answer is the fundamental right to privacy. To blur video effectively, organizations should follow the CISA redaction guidelines, which emphasize that "redaction is the process of removing sensitive information from a document or media so that it may be distributed."

"The shift we are seeing in 2026 is a move away from 'good enough' privacy toward 'provable' privacy," says Marcus Thorne, Senior Data Privacy Auditor. "If your privacy masking doesn't account for metadata and reflection-based re-identification, you are non-compliant."

For teams needing to blur faces in video, the workflow must be automated to avoid "frame-skipping," where a face is exposed for a fraction of a second due to manual error. Utilizing BlurMe’s Blur Face in Video tool allows for persistent tracking that adheres to data privacy laws in Europe.
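
A frame-skipping check can be sketched independently of any particular tool. The example below assumes, hypothetically, that a face detector and a masking step each report a per-frame count as a dict keyed by frame index; it flags frames where more faces were detected than masked.

```python
def find_privacy_gaps(detected, masked):
    """Return sorted frame indices where more faces were detected than masked.

    detected / masked: dicts mapping frame index -> face count (hypothetical format).
    """
    return sorted(
        frame for frame, faces in detected.items()
        if faces > masked.get(frame, 0)
    )


detected = {0: 1, 1: 1, 2: 2, 3: 1}
masked = {0: 1, 1: 0, 2: 2}
print(find_privacy_gaps(detected, masked))  # [1, 3]
```

Comparing counts is a coarse test: it only catches faces the detector saw, and a stricter version would match each detected box to a mask.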

Key Takeaways: Technical Requirements

- Metadata Stripping: Redaction must include the removal of GPS and device EXIF data.

- Reflective Masking: Ensure windows or mirrors in the background do not leak the subject's identity.

- Audit Trails: Maintain logs of when and how a video was redacted.
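
An audit trail entry can be as simple as one JSON line per redaction. This is a minimal sketch using only the Python standard library; the field names, the `method` label, and the log layout are illustrative assumptions, not a regulatory format.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(source_bytes, redacted_bytes, method, operator):
    """Build one audit-log entry; field names are illustrative, not a standard."""
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "source_sha256": hashlib.sha256(source_bytes).hexdigest(),
        "redacted_sha256": hashlib.sha256(redacted_bytes).hexdigest(),
        "method": method,
        "operator": operator,
    }


def append_record(log_path, record):
    """Append the record as one JSON line so earlier entries are never rewritten."""
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record, sort_keys=True) + "\n")
```

Storing hashes rather than the footage itself lets an auditor confirm which file was redacted, and when, without the log becoming another copy of the personal data.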

The Role of AI in GDPR Enforcement

As discussed in our previous analysis of blurring video for GDPR, the General Data Protection Regulation (GDPR) is now being enforced with the help of automated crawlers. These bots scan public-facing videos for unmasked faces. To stay ahead, a dedicated Blur Video tool is the most practical way to scale compliance across large archives of footage.

Q&A: Advanced GDPR Video Compliance

Q: Does GDPR apply to videos filmed in public?

A: Yes. Under the EU GDPR, capturing and storing identifiable images of individuals in public spaces constitutes processing personal data. GDPR principles require a lawful basis for this processing, often necessitating privacy masking if consent is not obtained.

Q: Can AI truly reverse a blurred face?

A: Through forensic de-pixelation, AI can often reconstruct features from low-resolution pixelation. To prevent this, data security standards now recommend high-variance blurring or solid masking that leaves no original pixel data behind.

Q: What are the specific compliance requirements for CCTV?

A: CCTV footage must follow the GDPR compliance framework, which includes "privacy by design." This means automatically applying redaction software to any footage before it is shared with third parties or stored in non-secure environments.